In my opinion, responsiveness is one of the most important aspects of developing mobile apps, yet most developers neglect it or are unaware of it.

In this post I will show you how to improve responsiveness when loading the pages your app presents to the user.

This post focuses on Android (Xamarin Forms). Even if you are not a Xamarin developer, keep reading: the problem is the same in other mobile technologies, and so is the approach to solving it.

I chose Android instead of iOS for this post, not because I think iOS apps are free of performance concerns, but mostly because Android apps are much more limited in the hardware resources the operating system allows them to consume. The better an app uses those resources, the better it will be.

As most Xamarin Forms developers know, any Xamarin app has room for performance improvements, such as choosing the correct layouts for its pages, avoiding too many renderers, enabling XAML compilation (XAMLC), and never nesting a ScrollView with other scrollable views like ListView. I won’t focus on those here because there are already many great articles explaining how to overcome these issues.

The problem

While hunting for bottlenecks in one of my apps, Nesus, two things caught my attention when testing navigation between pages:

- Deserialization process

- Fetching of remote data

Most pages in a mobile app have to show data that is fetched from a remote source and then presented to the user.

From the developer’s perspective, the only points at which to load that data are during the page load or right after the page has fully loaded. In Xamarin Forms apps, these are the PageLoad event and the OnAppearing event, respectively.

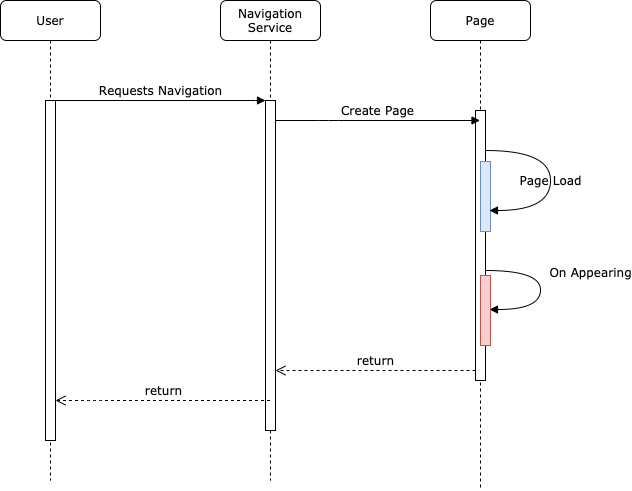

In a very simplistic way, every time the user requests to navigate/open a new page, the following occurs:

In a scenario like this, we would normally rely on caching libraries such as MonkeyCache or the great Akavache to improve responsiveness by avoiding unnecessary round-trips to the back-end services.

However, it doesn’t matter whether your data is already on your app’s local disk or in a local SQLite database: you still need to deserialize it back into memory before you can consume it.

Even if you use Akavache extension methods such as GetOrFetchObject() or GetAndFetchLatest(), deserialization still takes place.

As a result, every time a page loads, even if the data is already in your local repository, it has to be deserialized back into memory before your app can finally consume it.
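To make this concrete, here is a minimal Java sketch of a cache that, like a file or a SQLite row, keeps only serialized bytes. It uses Java rather than C#, but the idea carries over. `ByteCache` and `Product` are hypothetical names and not Akavache or MonkeyCache APIs. Java’s built-in object serialization stands in for whatever format your cache actually uses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model that a back-end service might return.
class Product implements Serializable {
    private static final long serialVersionUID = 1L;
    final String name;
    final double price;

    Product(String name, double price) {
        this.name = name;
        this.price = price;
    }
}

// Hypothetical stand-in for a local cache (file or SQLite row): it stores only bytes.
class ByteCache {
    private final Map<String, byte[]> store = new HashMap<>();
    int deserializations = 0; // counts how often cached bytes were turned back into objects

    void put(String key, Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        store.put(key, bytes.toByteArray()); // the stream is closed (and flushed) at this point
    }

    // A cache hit avoids the network round-trip, but NOT the deserialization cost.
    <T> T get(String key, Class<T> type) {
        byte[] data = store.get(key);
        if (data == null) {
            return null; // cache miss: the caller would fetch from the back end
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            deserializations++;
            return type.cast(in.readObject());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Calling `get` twice for the same key deserializes twice and returns two distinct `Product` instances. That repeated cost on every page load is what preloading tries to move off the navigation path.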

Solution

Short Answer:

Preload your data into memory before the user navigates to the page that needs it, and in the PageLoad() or OnAppearing() events simply fill the UI views with the in-memory data you previously loaded.
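Here is a minimal Java sketch of the short answer. It assumes a hypothetical `PreLoader` class, which is an illustration and not the API of Xambon.PreLoader. The current page calls `preload` with a loader that fetches and deserializes the data on a background thread. The next page’s appearing handler then calls `take`, which usually finds the object already in memory. Taking an entry also removes it, so the data does not outlive the page that needed it.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

// Hypothetical preloader, for illustration only (not the Xambon.PreLoader API).
class PreLoader {
    // Key -> data that is being (or has already been) loaded in the background.
    private final Map<String, CompletableFuture<Object>> pending = new ConcurrentHashMap<>();

    // Called while the user is still on the current page: start fetching and
    // deserializing the next page's data on a background thread.
    <T> void preload(String key, Supplier<T> loader) {
        pending.computeIfAbsent(key, k -> CompletableFuture.<Object>supplyAsync(loader::get));
    }

    // Called from the next page's appearing handler. The entry is removed so the
    // data does not stay in memory after the page has consumed it.
    <T> T take(String key, Class<T> type, Supplier<T> fallback) {
        CompletableFuture<Object> future = pending.remove(key);
        if (future == null) {
            return fallback.get(); // nothing preloaded: load on demand (the slow path)
        }
        return type.cast(future.join()); // usually already complete, so this returns at once
    }
}
```

If the user navigates before preloading finishes, `join()` waits for the remaining work instead of starting it from scratch. In a real app you would probably await the result asynchronously rather than block the UI thread.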

Long Answer:

Although the solution to this problem is simple, it speeds up your app’s page loads impressively.

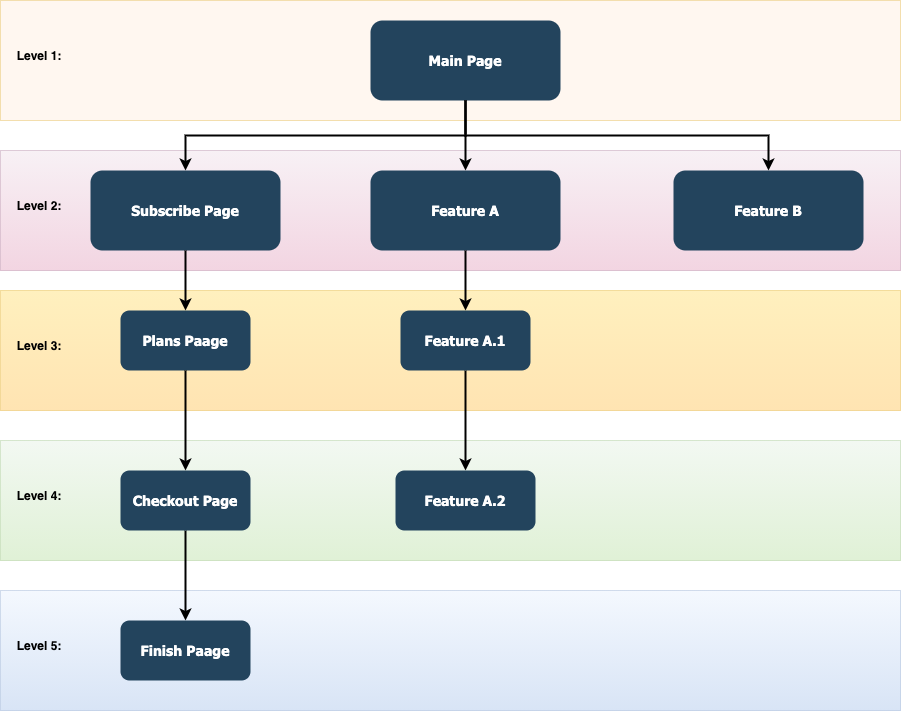

My solution is to pre-load data of the next-immediate level of page in the App, as you can see in the image below:

Each dark-blue box represents a page in the app, and each arrow shows the direction in which the user can navigate.

As soon as the user reaches a given “level” of the app, we load the data for the pages the user is most likely to navigate to next.

Say the user is on “Level 1”. We force the data for the pages in the next level (Level 2) to be preloaded, so that when the user opens any Level 2 page, the app already has the required data in memory to fill it in.
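The level-based strategy can be sketched in Java as a navigation map from each page to the pages one level below it. The class `LevelPreloader`, the page names and the loader functions are all hypothetical. `enter` returns the current page’s data, using the preloaded copy when there is one. It then starts loading every page on the next level and discards anything preloaded for pages that are no longer one step away.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

// Hypothetical level-based preloader over a navigation map.
class LevelPreloader {
    private final Map<String, List<String>> nextLevel; // page -> pages one level below it
    private final Map<String, Supplier<?>> loaders;    // page -> how to load its data
    private final Map<String, CompletableFuture<?>> preloaded = new ConcurrentHashMap<>();

    LevelPreloader(Map<String, List<String>> nextLevel, Map<String, Supplier<?>> loaders) {
        this.nextLevel = nextLevel;
        this.loaders = loaders;
    }

    // Called when the user opens `page`: returns its data, then preloads the next level.
    Object enter(String page) {
        CompletableFuture<?> ready = preloaded.remove(page);
        Object data = (ready != null) ? ready.join() : load(page); // fall back to an on-demand load

        List<String> children = nextLevel.getOrDefault(page, List.of());
        // Drop data preloaded for pages that are no longer one step away.
        preloaded.keySet().retainAll(children);
        for (String child : children) {
            preloaded.computeIfAbsent(child, k -> CompletableFuture.supplyAsync(() -> load(k)));
        }
        return data;
    }

    private Object load(String page) {
        Supplier<?> loader = loaders.get(page);
        return (loader == null) ? null : loader.get();
    }
}
```

With a chain such as home → list → details, each page’s data is loaded exactly once. Only the first page is loaded on demand, and the others are ready (or already in progress) by the time the user opens them.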

Note: Preloaded data must remain in the app’s memory for the shortest possible period, so you don’t risk an OutOfMemoryException or other exceptions caused by the app consuming too much memory.
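One way to honor that note is a simple time-to-live policy, sketched below in Java. The class name `ExpiringPreloadStore` and the TTL approach are illustrative assumptions, not something the original library is known to do. Each preloaded entry is handed out at most once, and entries nobody consumed expire and can be evicted. The clock is injected so the behavior is easy to test.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

// Hypothetical store for preloaded data: entries are handed out once and expire after a TTL.
class ExpiringPreloadStore {
    private static final class Entry {
        final Object value;
        final long expiresAtMillis;

        Entry(Object value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final LongSupplier clockMillis; // injectable clock, e.g. System::currentTimeMillis

    ExpiringPreloadStore(long ttlMillis, LongSupplier clockMillis) {
        this.ttlMillis = ttlMillis;
        this.clockMillis = clockMillis;
    }

    void put(String key, Object value) {
        entries.put(key, new Entry(value, clockMillis.getAsLong() + ttlMillis));
    }

    // Returns the value at most once; expired entries are treated as missing.
    Object take(String key) {
        Entry entry = entries.remove(key);
        if (entry == null || clockMillis.getAsLong() >= entry.expiresAtMillis) {
            return null;
        }
        return entry.value;
    }

    // Drops entries that were preloaded but never consumed (e.g. call on each navigation).
    void evictExpired() {
        long now = clockMillis.getAsLong();
        entries.values().removeIf(entry -> now >= entry.expiresAtMillis);
    }

    int size() {
        return entries.size();
    }
}
```

The TTL value is a trade-off. If it is too short, preloaded data expires before the user navigates. If it is too long, data for pages the user never opens sits in memory.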

The Code

I’ve created a preloader library called Xambon.PreLoader, which is available on my GitHub. Please feel free to download and use it.

I hope this post is useful to you and other developers.

Thanks for your time! 😀